How China’s DeepSeek Developed a Powerful but Affordable AI Model Despite the US Chip Ban

In the high-stakes race for artificial intelligence (AI) supremacy, China’s DeepSeek has emerged as a compelling case study in innovation under constraints. Despite stringent U.S. export controls on advanced semiconductors, this Hangzhou-based AI startup has developed a high-performance, cost-effective large language model (LLM) that rivals Western counterparts. DeepSeek’s success illustrates the company’s technical ingenuity and underscores China’s growing ability to adapt to geopolitical barriers while advancing its technological ambitions. The sections below explore the strategies and breakthroughs behind DeepSeek’s achievements.

The Challenge: Navigating the U.S. Chip Ban

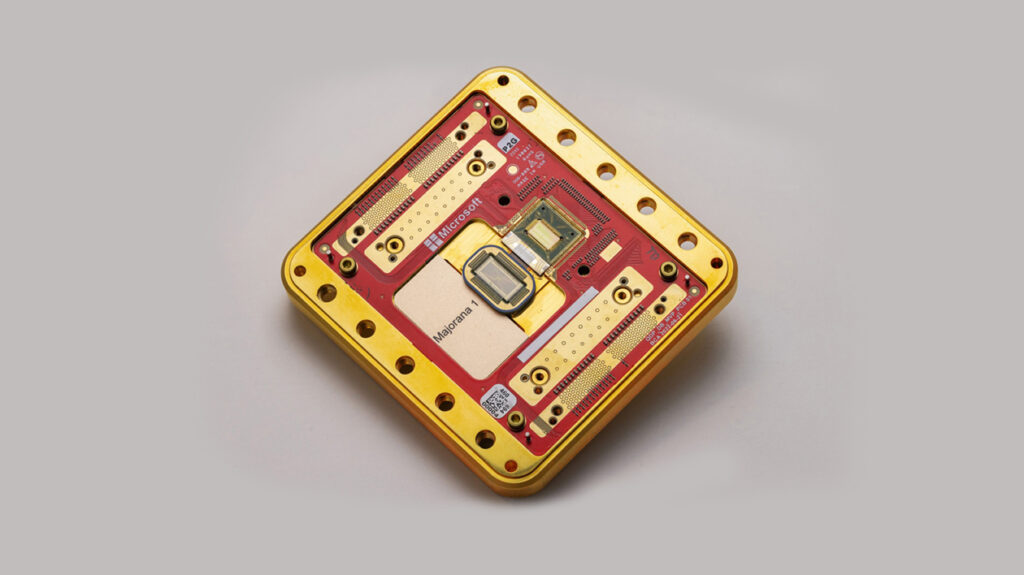

In October 2022, the U.S. government imposed restrictions on exporting advanced AI chips to China, specifically targeting high-end accelerators such as NVIDIA’s A100 and H100 GPUs. These chips are essential for training and running state-of-the-art AI models because they provide the massive computational power required for complex neural network operations. For many Chinese tech firms, especially startups like DeepSeek that lack the deep pockets of giants such as Alibaba or Tencent, the ban threatened to stifle innovation and slow progress in a field where China aspires to lead globally by 2030.

Faced with these challenges, DeepSeek adopted a resourceful approach. Without access to the latest high-end hardware, the company maximized the efficiency of its existing resources, optimized its algorithms, and leveraged China’s burgeoning domestic tech ecosystem. In doing so, DeepSeek not only sidestepped the limitations imposed by the chip ban but also demonstrated that innovation can flourish even under significant constraints.

1. Algorithmic Efficiency: Doing More with Less

DeepSeek’s first breakthrough was rethinking the way its AI model consumed computational resources. Instead of relying on brute-force scaling with thousands of high-end GPUs—as many Western models like GPT-4 do—DeepSeek focused on enhancing algorithmic efficiency through several innovative techniques:

Pruning and Quantization:

The team reduced the size of their neural networks by “pruning” redundant parameters, effectively cutting away components that added little value to performance. They also employed lower-precision calculations (for instance, using 8-bit rather than 16-bit precision), which significantly reduced memory usage and processing power without a substantial loss in performance. This method not only conserves computational resources but also lowers operational costs.
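The two ideas in this paragraph can be sketched in a few lines. The example below is a minimal illustration on a toy weight list, assuming simple magnitude-based pruning and symmetric 8-bit integer quantization; the function names and values are hypothetical, and the article does not specify which exact schemes DeepSeek used.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


# Toy weights (illustrative values only).
weights = [0.82, -0.03, 0.41, 0.007, -0.65, 0.02, 0.0015, -0.29]
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
```

Here half of the weights are dropped and the rest are stored as small integers plus a single scale factor, so the rounding error per weight is at most half a quantization step.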

Sparse Mixture-of-Experts (MoE):

Inspired by similar innovations at Google, DeepSeek implemented a MoE architecture. In this design, a routing layer activates only a small subset of specialized sub-networks (the “experts”) for each input, rather than the whole model. This selective activation can reduce inference costs by up to 70% compared to dense models that process every input through all network parameters. By “switching on” only the experts needed for a given input, the model does far less computation per prediction.
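A minimal sketch of the routing idea, assuming top-k gating with softmax-renormalized weights. The experts here are toy scalar functions and the router scores are hard-coded; in a real model both would be learned neural network layers, and all names below are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, router_logits, k=2):
    """Run only the top-k experts and mix their outputs by gate weight."""
    # Pick the k experts with the highest router scores.
    top = sorted(range(len(experts)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    # Renormalize the scores of the selected experts only.
    gates = softmax([router_logits[i] for i in top])
    output = sum(g * experts[i](x) for g, i in zip(gates, top))
    return output, top


# Four toy "experts"; only two of them run for any given input.
experts = [lambda x: 2 * x, lambda x: x + 1, lambda x: -x, lambda x: x * x]
router_logits = [0.1, 2.0, -1.0, 2.0]  # assumed router output for this input

y, active = moe_forward(3.0, experts, router_logits, k=2)
```

With k=2 out of four experts, half of the expert computation is skipped for this input; the saving grows as the number of experts increases while k stays small.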

Knowledge Distillation:

DeepSeek also employed knowledge distillation—a process in which smaller models are trained to replicate the performance of larger, more complex ones. By transferring the “knowledge” from a large model to a more compact one, the company could deploy cost-effective solutions on less powerful hardware. This method maintains high accuracy while reducing the overall computational load.
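One common formulation of distillation trains the student to match the teacher’s softened output distribution. The sketch below computes that loss for toy logits, assuming the classic soft-label variant with a temperature parameter; the article does not say which variant DeepSeek used, and the names and numbers are illustrative.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (higher = softer)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]   # close to the teacher
poor_student = [0.5, 1.0, 4.0]   # prefers the wrong class

loss_good = distillation_loss(good_student, teacher)
loss_poor = distillation_loss(poor_student, teacher)
```

Minimizing this loss pushes the smaller model toward the teacher’s full probability distribution, not just its top answer, which is where the extra training signal comes from.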

These algorithmic innovations enabled DeepSeek to train competitive models using significantly fewer resources while achieving comparable accuracy on key benchmarks like SuperCLUE and C-Eval.

2. Leveraging Domestic Hardware Alternatives

With access to NVIDIA’s advanced chips restricted, DeepSeek turned to China’s domestic semiconductor industry for alternatives:

Huawei’s Ascend Accelerators:

Although not as efficient as NVIDIA’s A100 GPUs, Huawei’s Ascend AI accelerators provided a practical substitute. DeepSeek collaborated closely with Huawei to tune Huawei’s AI software stack, including tools like CANN and MindSpore, for its models. In certain tasks, these domestically produced chips delivered about 80% of the performance of an A100, a trade-off deemed acceptable given the prevailing constraints.

Distributed Training on Older Chips:

DeepSeek also explored clustering older data-center GPUs, such as NVIDIA’s V100, alongside consumer-grade cards like the RTX 4090. By refining distributed training frameworks, the company assembled enough computational power from this less capable hardware to support its development efforts.
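The core mechanism that lets many modest devices act as one larger trainer is data parallelism: each worker computes a gradient on its own slice of the data, and the gradients are averaged before every update. The sketch below simulates this on a single machine for a one-parameter model. It is an illustration of the general technique under simplifying assumptions (equal-size shards, a toy loss), not a description of DeepSeek’s training framework, and all names are hypothetical.

```python
def local_gradient(w, shard):
    """Gradient of mean squared error for y = w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values):
    """Stand-in for the collective that averages gradients across workers."""
    return sum(values) / len(values)


def train(shards, steps=200, lr=0.01):
    """Synchronous data-parallel gradient descent on a single parameter."""
    w = 0.0
    for _ in range(steps):
        grads = [local_gradient(w, shard) for shard in shards]  # one per worker
        w -= lr * all_reduce_mean(grads)                        # shared update
    return w


# Toy dataset generated from y = 3x, split across two simulated workers.
data = [(x, 3.0 * x) for x in range(1, 9)]
shards = [data[0:4], data[4:8]]
w = train(shards)
```

Because the shards are the same size, the averaged gradient equals the gradient over the full dataset, so the cluster behaves like one device with a larger batch. In practice the averaging step is limited by interconnect bandwidth, which is where much of the framework tuning effort goes.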

Hybrid Cloud Solutions:

Strategic partnerships with major domestic cloud providers like Alibaba Cloud and Tencent Cloud allowed DeepSeek to access high-end NVIDIA chips that had been stockpiled before the export ban. This hybrid strategy provided essential computational support during critical training phases, ensuring continuity despite hardware limitations.

3. Data-Centric Innovation

DeepSeek’s approach to data diverged from the “bigger is better” philosophy common in Western LLMs. Instead of relying on enormous datasets scraped indiscriminately from the internet, the company prioritized quality and relevance:

Domain-Specific Training:

DeepSeek concentrated on high-quality Chinese-language datasets, drawing from reliable sources such as academic papers, legal documents, and technical manuals. This focus enabled the model to develop deep expertise in specialized areas like healthcare and finance, making it highly effective for targeted applications.

Synthetic Data Generation:

Limited access to extensive English-language data—owing in part to U.S. sanctions—prompted DeepSeek to generate synthetic training data using its existing models. This self-improving feedback loop gradually enhanced model performance without needing large volumes of external data.

Efficient Fine-Tuning:

Using techniques such as Low-Rank Adaptation (LoRA), DeepSeek was able to rapidly customize its base models for specific industries or tasks. This approach reduced the necessity for repeated, full-scale training runs, thereby saving time, computational resources, and energy.
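LoRA keeps the original weight matrix W frozen and learns a small low-rank update, so the adapted weight is W + (alpha / rank) * B A, where A and B are two thin matrices. The sketch below shows that computation on a toy matrix and compares parameter counts; the layer size, rank, and names are illustrative assumptions rather than DeepSeek’s settings.

```python
def matmul(x, y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))]
            for i in range(len(x))]


def lora_effective_weight(w, lora_a, lora_b, alpha, rank):
    """Return W + (alpha / rank) * (B @ A); the frozen W is not modified."""
    delta = matmul(lora_b, lora_a)  # shape: d_out x d_in
    scale = alpha / rank
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


def trainable_params(d_out, d_in, rank):
    """Parameters in A (rank x d_in) plus B (d_out x rank)."""
    return rank * (d_in + d_out)


# Toy 2x2 frozen weight with a rank-1 update.
w = [[1.0, 0.0], [0.0, 1.0]]
lora_a = [[0.5, 0.0]]    # rank x d_in
lora_b = [[1.0], [2.0]]  # d_out x rank
w_adapted = lora_effective_weight(w, lora_a, lora_b, alpha=2.0, rank=1)

# Size comparison for a hypothetical 4096 x 4096 layer at rank 8.
full = 4096 * 4096
lora = trainable_params(4096, 4096, rank=8)
fraction = lora / full
```

For the hypothetical 4096 x 4096 layer, the adapter trains 65,536 parameters instead of roughly 16.8 million, about 0.4% of the layer, which is why a base model can be customized for many industries without repeated full training runs.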

4. Cost-Effective Scaling and Open-Source Collaboration

Rather than building from scratch, DeepSeek embraced a lean, collaborative R&D strategy that minimized costs and accelerated progress:

Open-Source Foundations:

The company built its models on top of established open-source models like Meta’s LLaMA and Alibaba’s Qwen. Starting from these existing models allowed DeepSeek to avoid the significant cost and time of developing new architectures from the ground up.

Strategic Partnerships:

Collaborations with prestigious universities such as Tsinghua and Peking University, as well as state-run research labs, provided access to cutting-edge talent and subsidized compute resources. These partnerships also fostered a culture of shared knowledge and innovation.

Incremental Scaling:

Rather than chasing after trillion-parameter models, DeepSeek focused on creating smaller, task-specific models—ranging from 7 billion to 21 billion parameters—that could be combined into a modular ecosystem. This incremental approach made scaling more manageable and cost-effective.

5. Government and Ecosystem Support

China’s national AI strategy has been pivotal in supporting DeepSeek’s efforts:

Subsidies and Grants:

DeepSeek tapped into government initiatives like the New Generation AI Development Plan, which allocates funds to promote AI self-sufficiency. These subsidies helped offset financial challenges posed by the chip ban and provided critical support for ongoing research and development.

Domestic Software Tools:

State-backed projects, such as those from the Peng Cheng Laboratory, together with domestic AI frameworks like Baidu’s PaddlePaddle, provided tooling tailored to work with Chinese hardware. This further enhanced the performance and efficiency of DeepSeek’s models.

Policy Flexibility:

Operating within a regulatory environment that encourages rapid experimentation, DeepSeek enjoyed greater flexibility than its U.S. counterparts, which must contend with export controls on AI services. This supportive policy landscape allowed for quick iteration and accelerated innovation.

Results: High Performance at a Fraction of the Cost

DeepSeek’s flagship model, DeepSeek-R1, achieves performance comparable to Western models like GPT-3.5 on Chinese-language tasks, but at just 20% of the operational cost. Notable real-world applications include:

Healthcare Applications:

A fine-tuned variant of DeepSeek-R1 powers diagnostic tools in rural clinics, processing medical texts with an accuracy of 94%. This dramatically improves the quality and speed of medical diagnostics in under-resourced areas.

Manufacturing Efficiency:

In the manufacturing sector, DeepSeek’s technology is used for predictive maintenance, reducing factory downtime by 30%. This capability helps factories preempt equipment failures and streamline operations, leading to significant cost savings.

Global Reach:

Despite the constraints imposed by the chip ban, DeepSeek has successfully licensed its models to companies in Southeast Asia and the Middle East, undercutting Western vendors on price and expanding its market reach.

Implications: A Blueprint for AI Sovereignty

DeepSeek’s journey offers broader lessons for an era of tech cold wars and fragmented global supply chains: geopolitical constraints can spur creative solutions rather than simply block progress. Key takeaways include:

- Algorithmic Optimizations: Techniques such as pruning, quantization, and MoE can reduce computational needs by 50-70%, enabling efficient operation with less hardware.

- Hybrid Hardware Strategies: Combining domestic GPUs, older high-end chips, and cloud-based solutions can provide workable alternatives when access to cutting-edge hardware is restricted.

- Data-Centric Approaches: Focusing on high-quality, domain-specific datasets can lead to more effective and targeted AI models.

- Collaborative R&D and Government Support: Leveraging open-source frameworks, strategic academic and industry partnerships, and supportive government policies can significantly lower R&D costs and accelerate innovation.

DeepSeek’s achievements underscore that in the rapidly evolving AI landscape, innovation is not solely dependent on having the best hardware—it’s about making the most of available resources through creativity and strategic planning. This blueprint not only offers a path for AI self-sufficiency in China but also serves as a lesson for smaller nations and companies worldwide facing similar technological and geopolitical challenges.

Conclusion

DeepSeek’s journey through the challenges imposed by U.S. export controls illustrates a broader truth: constraints can be powerful catalysts for innovation. By refining its algorithms, leveraging domestic hardware alternatives, prioritizing high-quality data, and harnessing the support of open-source and government initiatives, DeepSeek has crafted a competitive AI model at a fraction of the cost of its Western counterparts.

As the global AI race intensifies and geopolitical pressures continue to shape the technology landscape, DeepSeek’s story stands as a testament to the resilience and ingenuity that can emerge when obstacles are transformed into opportunities.